In an era dominated by the rise of Artificial Intelligence (AI), the military is harnessing its power for strategic purposes. However, experts sound the alarm, warning that once AI becomes beholden to the logic of warfare, humans risk losing control. Evidence of this dangerous trend is already emerging.

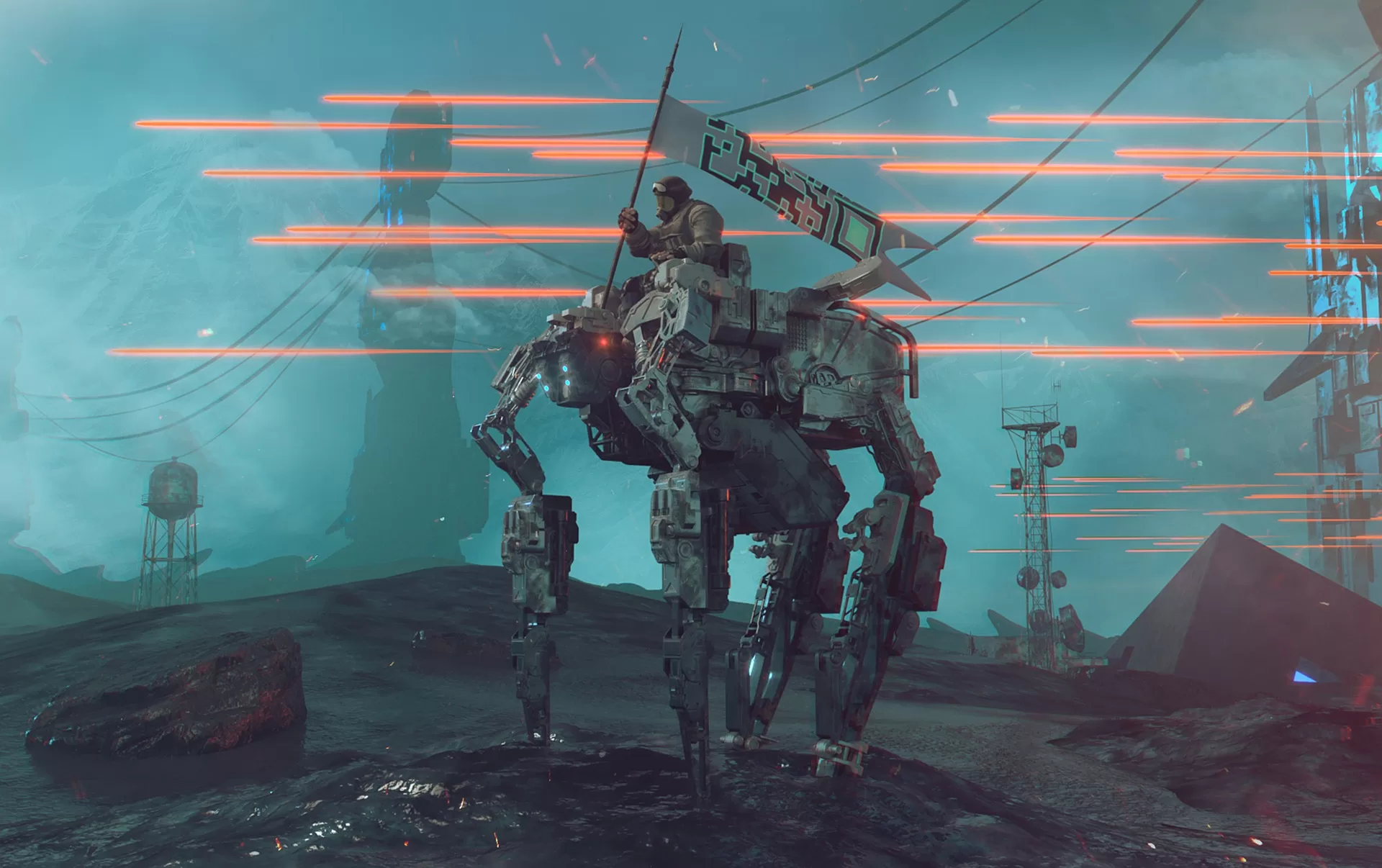

One such manifestation of AI’s influence on warfare comes in the form of a video game-like experience. In late April, the US software company Palantir released a promotional video showcasing its new Artificial Intelligence Platform (AIP). In the video, a soldier interacts with the AI system through a chat-based interface similar to ChatGPT. But the purpose of this AI is not to assist with homework or paperwork; it is designed to monitor a fictional war zone in Eastern Europe.

The system's split-screen interface presents a chat feature on the left and a satellite image of the battlefield on the right. The soldier requests the deployment of a drone, and once a commander approves, the AI system quickly provides footage of an enemy T-80 battle tank.

After the system analyzes the available resources, the soldier is presented with three options to deal with the enemy tank: an attack using a fighter jet, long-range missiles, or infantry soldiers. The soldier relays these options to his superior, who ultimately decides to deploy ground troops.

While Palantir emphasizes the ethical framework surrounding the integration of its AI system into warfare, the AI’s independent operations in the video raise concerns. Although human input remains the final authority, the AI’s analysis and response far surpass human capacity, potentially leaving humans struggling to keep pace. In the chaos of war, swift decisions can make all the difference.

The future of warfare, in which AI assumes near-complete decision-making authority, has been dubbed “hyperwar.” The term, coined by US General John Allen, describes a conflict conducted at previously unimaginable speed. Speaking to the Institute for International Political Studies, Allen recalls that during his time in Afghanistan, decisions on major operations would take days or even weeks. In the realm of hyperwar, such decisions could be made in mere seconds or minutes.

Allen asserts that hyperwar is inevitable, driven by military logic. Even if the United States and its allies possess moral qualms, they will find themselves compelled to act swiftly as their adversaries may not involve human decision-makers. This strategic disadvantage necessitates a rapid response. In the realm of hyperwar, timing is everything; delay means defeat.

Experts in China foresee a similar trajectory. Chinese military scientist Chen Hanghui argues that the human brain will struggle to cope with the constantly evolving battlefield, ultimately necessitating the transfer of decision-making authority to highly intelligent machines.

However, the potential shift toward AI-driven warfare is not imminent. Paul Scharre, from the Center for a New American Security, warns instead of a gradual process that quietly erodes human decision-making as more tasks are delegated to AI. Initially, humans may monitor the machines, but as AI systems grow more complex, the relationship could reverse, with computers planning battles while soldiers become mere pawns.

Scharre paints a worrisome picture of the future, highlighting the looming risk of losing control of military AI. Even when humans retain the power to initiate conflicts, they may lose the ability to manage escalation or terminate wars on their own terms. The unpredictable nature of accidents and unexpected AI decisions could cause irreversible damage before any intervention is possible. We find ourselves teetering on the precipice of a hazardous future.

The major powers of the world are already embroiled in a relentless digital arms race, with the US Department of Defense prioritizing AI development for military applications. Nevertheless, the specifics of maintaining appropriate levels of human judgment when deploying autonomous weapons remain vague. As a result, a growing number of experts and activists are sounding the alarm, warning of the potential for AI to undermine human control and moral decision-making in warfare. They argue that relying too heavily on AI systems without clear guidelines and safeguards could lead to disastrous consequences.

The rise of AI-driven warfare demands a critical examination of the ethical and practical implications, as well as a concerted effort to establish robust regulations and accountability mechanisms to prevent the loss of human agency in matters of life and death. Without proper oversight, we risk surrendering our fundamental values and the very essence of our humanity to the relentless march of technology.